Serverless computing is all the rage these days. Despite the name, serverless doesn’t mean there are literally no servers; it just means you don’t have to think about them. They still run in the background, but the infrastructure is managed as code, so you don’t need to SSH into a server to install and update software.

Some serverless platforms can be somewhat limiting. For example, Amazon’s AWS Lambda uses a proprietary runtime, so you can’t easily run an existing application on it. However, there are also more flexible platforms like Heroku, AWS Elastic Beanstalk, and Google Cloud App Engine, which let you run your existing applications written in a language like Python, PHP, or Ruby in a containerized environment.

Some platforms allow custom Dockerfiles to be run, which means you can run almost any code you want. Sure, you could learn to use Kubernetes, but these platforms make it easy to deploy and scale applications without miles of YAML files and hours and hours of learning how Kubernetes works.

DigitalOcean is the latest cloud hosting firm to join the serverless race with its new App Platform. Can the new platform live up to the lofty promises and replace the existing Droplet VPS offering? After trying out the platform for almost a year now, here are my honest experiences. Let’s start with the good things first.

The good

Integrated CI/CD

Getting started is easy. Just add a new app and point it to a Git repository on GitHub or GitLab, and App Platform will take it from there. If you have a Dockerfile in the repo, the application will be automatically detected as a Docker application. If not, App Platform will look at your code and pick the appropriate Heroku buildpack. App Platform will then build your app and create a URL like https://my-app-arb1y.ondigitalocean.app for it. If you need additional commands when building and running the app, they can be configured in the App Platform settings.

Zero Downtime deployments

One really nice feature is the atomic, zero-downtime deployments. After a deployment is ready, the App Platform will ping your application to make sure it’s healthy. If an error occurs during running the deployment commands or the application doesn’t respond to the health check, App Platform will roll back the application to its previous version. This gives you peace of mind that your application is unlikely to end up in a broken state after deployment.

The App Platform sends you an email every time a deployment fails. Zero-downtime deployment means the working build of your app keeps running despite the error.
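As a rough illustration of what such a health check might hit, here is a minimal sketch of an endpoint built with Python’s standard library. The `/healthz` path, the `is_healthy` helper, and the port are assumptions made for the example, not App Platform defaults; your app’s real readiness logic will differ.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer


def is_healthy() -> bool:
    """Hypothetical readiness check; a real app might ping its database here."""
    return True


def health_response(path: str) -> tuple[int, bytes]:
    """Map a request path to an HTTP status code and response body."""
    if path != "/healthz":  # assumed path, chosen for this example
        return 404, b"not found"
    return (200, b"ok") if is_healthy() else (503, b"unhealthy")


class Handler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        status, body = health_response(self.path)
        self.send_response(status)
        self.send_header("Content-Type", "text/plain")
        self.end_headers()
        self.wfile.write(body)


def serve(port: int = 8080) -> None:
    """Start the server; port 8080 is an assumption, use your app's configured port."""
    HTTPServer(("0.0.0.0", port), Handler).serve_forever()
```

Calling `serve()` starts the server. If the endpoint returns anything other than a success status after a deploy, the behaviour described above suggests the platform would keep the previous version running.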

User interface

Like all DigitalOcean products, the App Platform user interface is really clear and easy to use. It’s a breath of fresh air compared to Amazon’s and Google’s cloud offerings. Everything can be done in a few clicks. While someone working in a corporate environment may disagree, I find it’s a plus that you don’t need to manage “IAM policies” in order to have your app up and running. DigitalOcean has always marketed itself to the SME crowd anyway.

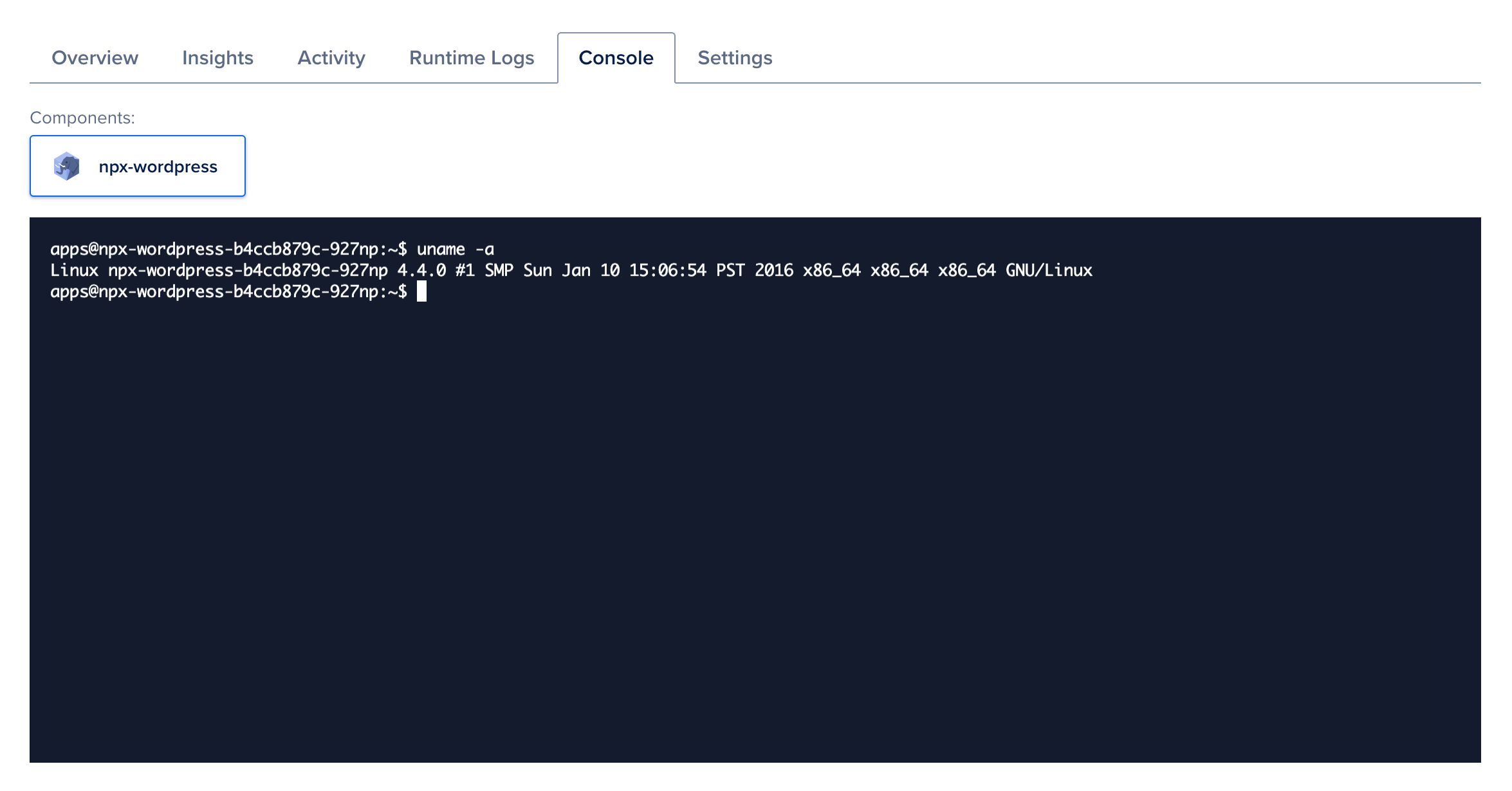

The console comes in handy when you need to run one-off commands inside the container

However, there seem to be some teething issues with the UI. The user interface sometimes goes out of sync and shows information from a wrong/previous deployment. I’ve also noticed that the shell times out really quickly if you don’t use it and it seems that on Windows, you can’t copy-paste anything from the terminal while on Mac it works just fine.

Databases

App Platform integrates well with the DigitalOcean Managed Databases service. It allows you to link a managed database to your application. Once you select the managed database, database name, and user, App Platform connects them together and adds environment variables automatically so your application can connect to the database. MySQL, PostgreSQL, and MongoDB are supported. In addition to connecting an existing managed database, there’s also an option to create “dev databases” with 512 MB of RAM and 1 GB of disk, which might be useful in some cases.
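On the application side, picking up those injected environment variables could look something like the sketch below. The variable name `DATABASE_URL` and the URL shape are assumptions for illustration; check which variables App Platform actually added to your app before relying on them.

```python
import os
from urllib.parse import urlparse


def database_settings(env_var: str = "DATABASE_URL") -> dict:
    """Parse a connection URL from the environment into its parts.

    The default variable name is an assumption, not a documented guarantee.
    """
    url = urlparse(os.environ[env_var])
    return {
        "engine": url.scheme,
        "host": url.hostname,
        "port": url.port,
        "user": url.username,
        "password": url.password,
        "name": url.path.lstrip("/"),
    }
```

The resulting dictionary can then be handed to whichever database driver or framework settings your app uses.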

URL and domain management

By default, every App Platform app has a URL in the form of https://my-app-arb1y.ondigitalocean.app . You can also point a domain to the app, either by configuring DNS records manually or by letting DigitalOcean manage the DNS records for you. HTTPS is automatically supported via the Cloudflare CDN, so you don’t need to do any extra work to configure TLS certificates.

In addition to having an app running in the root, you can also configure additional apps to run on subfolders, like https://my-app-arb1y.ondigitalocean.app/app-1 and https://my-app-arb1y.ondigitalocean.app/app-2 . Apps don’t need to be externally accessible. It is not supported in the UI, but you can remove any external routes using the YAML configuration and only expose internal ports to other components running in the same app. In your code, you can access these apps using the hostname and a port.

App Platform’s URL management is great since it simplifies the repetitive task of managing DNS records and TLS certificates by hand.

CDN

The App Platform is integrated with the Cloudflare CDN out of the box, so you don’t need an additional CDN, which is nice.

Storage

Given its serverless nature, App Platform strongly discourages using the local filesystem for storing files, unless doing so temporarily. The filesystem is ephemeral and every time you deploy the app, all the files will be lost. You will either need to store the data in the database or use a storage service like S3 or DigitalOcean’s own S3 compatible DigitalOcean Spaces product. DigitalOcean Block Storage is unfortunately not supported, at least yet.
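In practice this means treating local disk as scratch space only. A minimal sketch of that pattern, with a hypothetical `render_report` function standing in for real work:

```python
import tempfile
from pathlib import Path


def render_report(data: bytes) -> bytes:
    """Use local disk only as scratch space and return the result to be stored elsewhere."""
    with tempfile.TemporaryDirectory() as scratch:
        path = Path(scratch) / "report.bin"
        path.write_bytes(data)
        processed = path.read_bytes().upper()  # stand-in for real processing
    # The scratch directory is deleted at this point; persist the result
    # in a database or an object storage service instead.
    return processed
```

Anything that must survive a deploy should be handed off to the database or object storage before the request finishes.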

Scaling

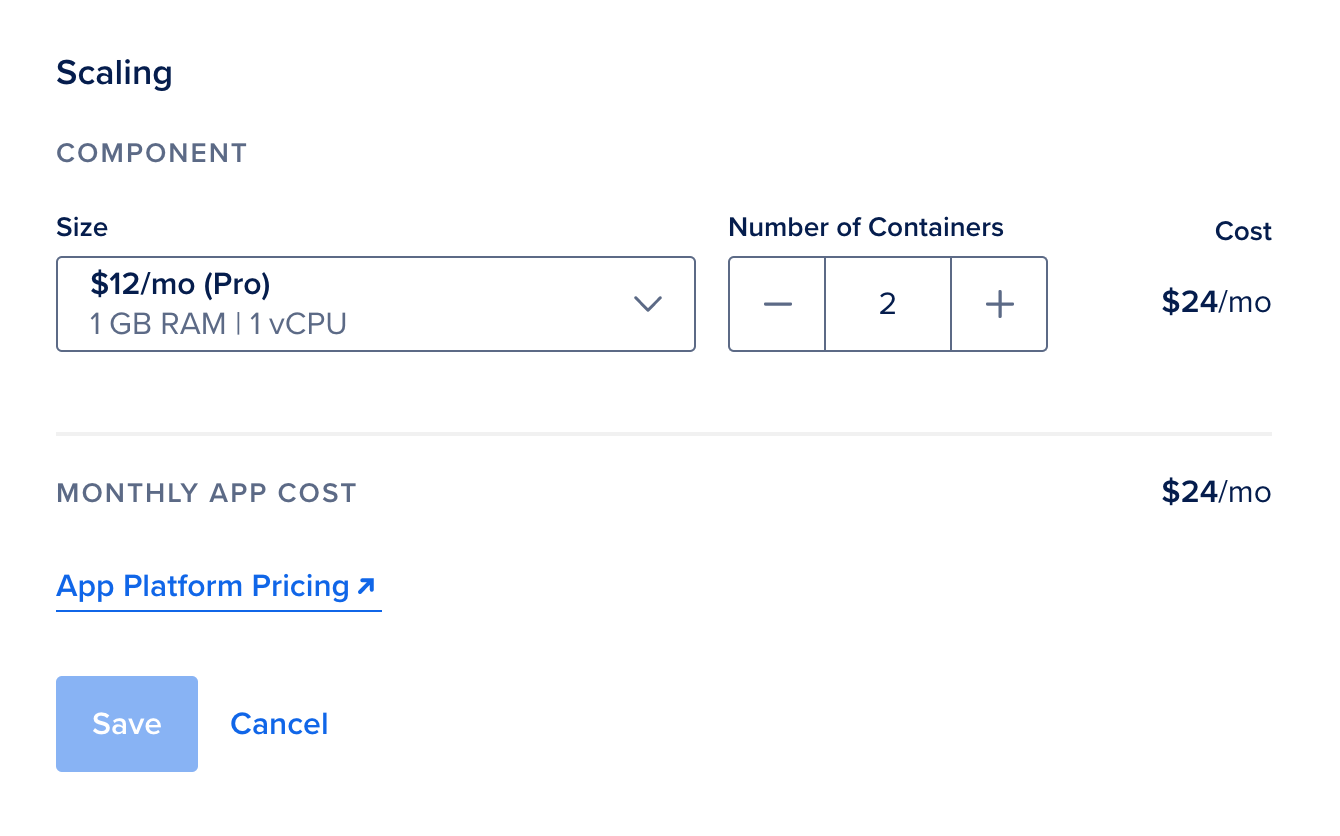

It’s easy to scale applications vertically by selecting a container with more CPU threads and memory, or horizontally by running several containers side by side. The App Platform automatically load-balances HTTP requests between horizontally scaled containers, which makes implementing horizontal scaling a breeze.

Scaling is easy: you can select the container type and scale out the number of containers. However, App Platform doesn’t support automatic scaling yet.

One thing still missing is support for automatic scaling, which would take metrics such as CPU, memory use, or request latency into account and automatically scale the number of containers depending on the workload. I would imagine DigitalOcean adding this feature at some point in the future as rivals like Heroku and Google Cloud offer this already.
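To make the idea concrete, here is a sketch of the kind of rule an autoscaler might apply. It borrows the proportional formula used by the Kubernetes Horizontal Pod Autoscaler; it is not something App Platform does, and the function name, CPU metric, and limits are assumptions for illustration.

```python
import math


def desired_containers(current: int, cpu_now: float, cpu_target: float,
                       minimum: int = 1, maximum: int = 10) -> int:
    """Scale the container count in proportion to how far the metric is from its target."""
    raw = math.ceil(current * cpu_now / cpu_target)
    # Clamp to the allowed range so a metric spike cannot scale without bound.
    return max(minimum, min(maximum, raw))
```

With two containers at 90% CPU and a 60% target, the rule asks for three containers. Without this feature you have to watch the metrics and change the container count by hand.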

The bad

So far, the App Platform seems to be a great choice for running your web applications. But there’s one huge caveat.

Performance

To put it simply, the App Platform performance is bad. And I mean really bad. I’ve been trying to find ways to improve the App Platform performance for the past year or so and I haven’t found a way to make it as fast as a simple DigitalOcean VPS droplet. The performance is even worse than my local development environment. I’ve tried everything: Nginx, Apache, reverse proxying, running the web server in a Docker container but there are always weird slowdowns and hangs and the App Platform never runs as fast as it should.

The worst part is that the bottleneck is filesystem performance, also known as file I/O. You can upgrade the App Platform container to a beefy machine with gigabytes of RAM and dedicated CPU threads, but it doesn’t help: the simple process of reading and writing files is terribly slow, which causes your app to grind to a halt.

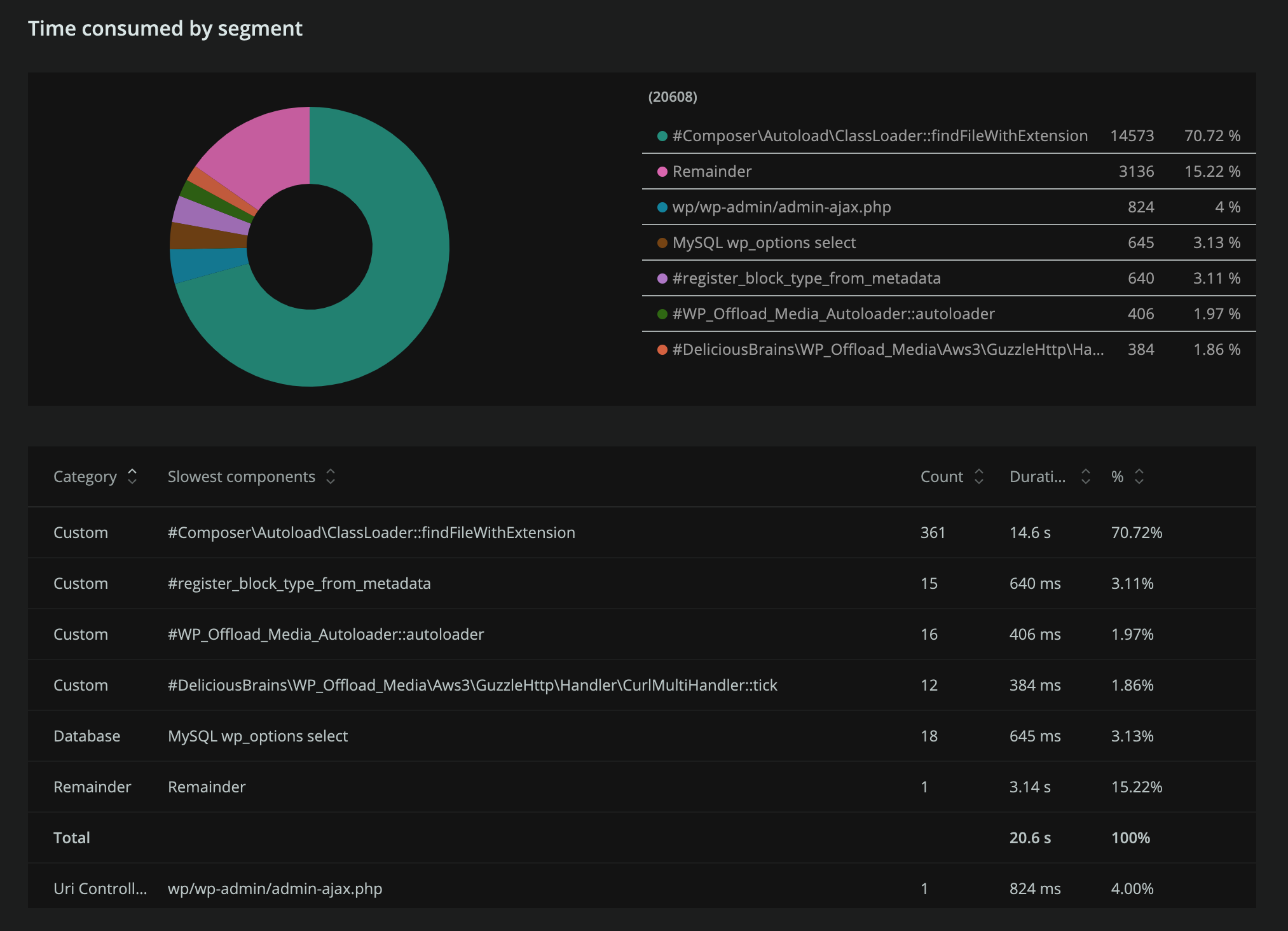

New Relic shows that the application spends 70% of its time in a method called Composer\Autoload\ClassLoader::findFileWithExtension. What does this method do? It checks if a file exists in the filesystem.
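One way to check whether file lookups are slow on a given host is to time them directly. The sketch below is a Python stand-in for the kind of existence checks Composer’s autoloader performs; it is not the autoloader itself, and the `time_exists_checks` helper and the `missing_*.php` file names are made up for illustration.

```python
import os
import tempfile
import time


def time_exists_checks(directory: str, count: int = 10_000) -> float:
    """Return the average number of seconds per os.path.exists() call on missing files."""
    start = time.perf_counter()
    for i in range(count):
        os.path.exists(os.path.join(directory, f"missing_{i}.php"))
    return (time.perf_counter() - start) / count


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        print(f"{time_exists_checks(d) * 1e6:.2f} µs per check")
```

Running the same script on an App Platform container and on a Droplet would give a rough comparison of per-lookup cost, though a temporary directory may not behave exactly like the application’s own files.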

So, what’s causing all these issues? In my research, it seems like it’s a piece of software called gVisor. It’s a program made by Google which DigitalOcean uses to isolate App Platform containers for additional security.

Here’s DigitalOcean describing why they chose this technology:

We explored a few technologies around this area and settled on leveraging gVisor for isolation. You can think of gVisor as a “mini” kernel defined in user space. It implements a subset of all the system calls, /proc files, and so on that are supported, and it intercepts the actual system calls created by an application. It runs them in this sandboxed environment, only forwarding a very limited subset of these calls that it deems safe to the actual host kernel.

Another runtime solution we considered was Kata Containers. These are probably closer to the mental model you have of a cloud Virtual Machine. With Kata Containers, each container is wrapped with a lightweight virtual machine and its own kernel. Throughout our benchmarking, we determined that gVisor’s performance was a better fit for App Platforms needs.

End to end cloud native app builds and deployments with App Platform

The problem is that gVisor has really poor performance when dealing with file system operations. Google even admits this in their documentation.

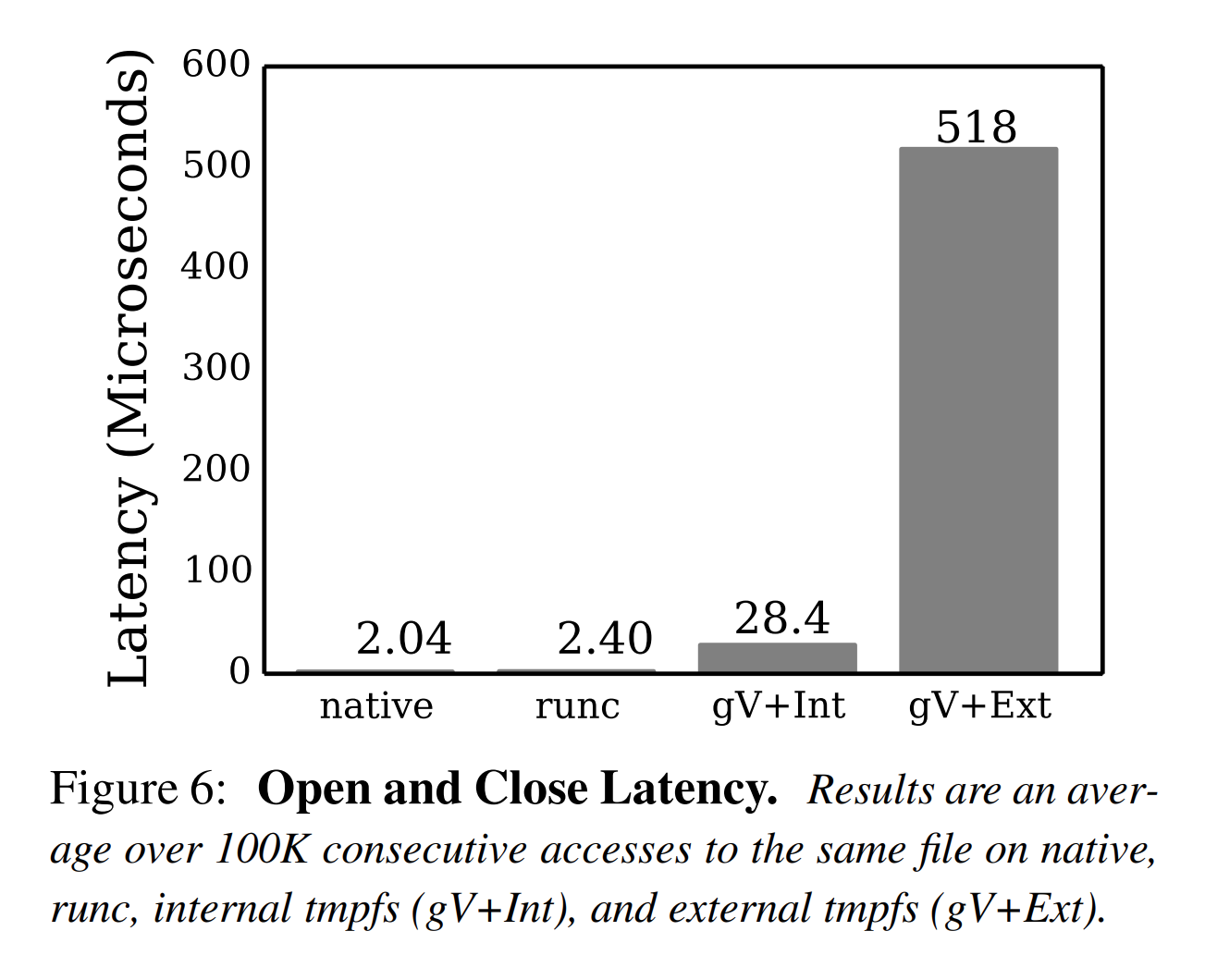

File system

Some aspects of file system performance are also reflective of implementation costs, and an area where gVisor’s implementation is improving quickly.

In terms of raw disk I/O, gVisor does not introduce significant fundamental overhead. For general file operations, gVisor introduces a small fixed overhead for data that transitions across the sandbox boundary. This manifests as structural costs in some cases, since these operations must be routed through the Gofer as a result of our Security Model, but in most cases are dominated by implementation costs, due to an internal Virtual File System (VFS) implementation that needs improvement.

Performance Guide, /gvisor.dev

The high costs of VFS operations can manifest in benchmarks that execute many such operations in the hot path for serving requests, for example. The above figure shows the result of using gVisor to serve small pieces of static content with predictably poor results. This workload represents apache serving a single file sized 100k from the container image to a client running ApacheBench with varying levels of concurrency. The high overhead comes principally from the VFS implementation that needs improvement, with several internal serialization points (since all requests are reading the same file). Note that some of the network stack performance issues also impact this benchmark.

Performance Guide, /gvisor.dev

This doesn’t sound very promising. Here are some other articles and benchmarks I found online about gVisor performance:

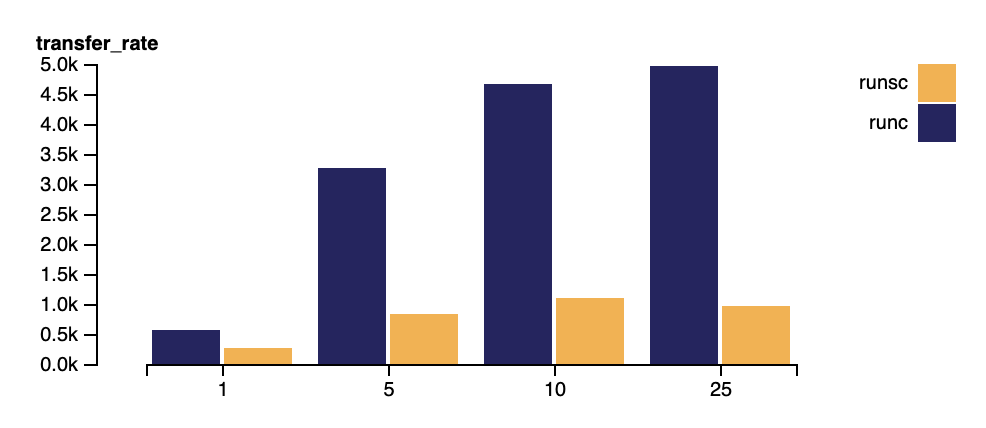

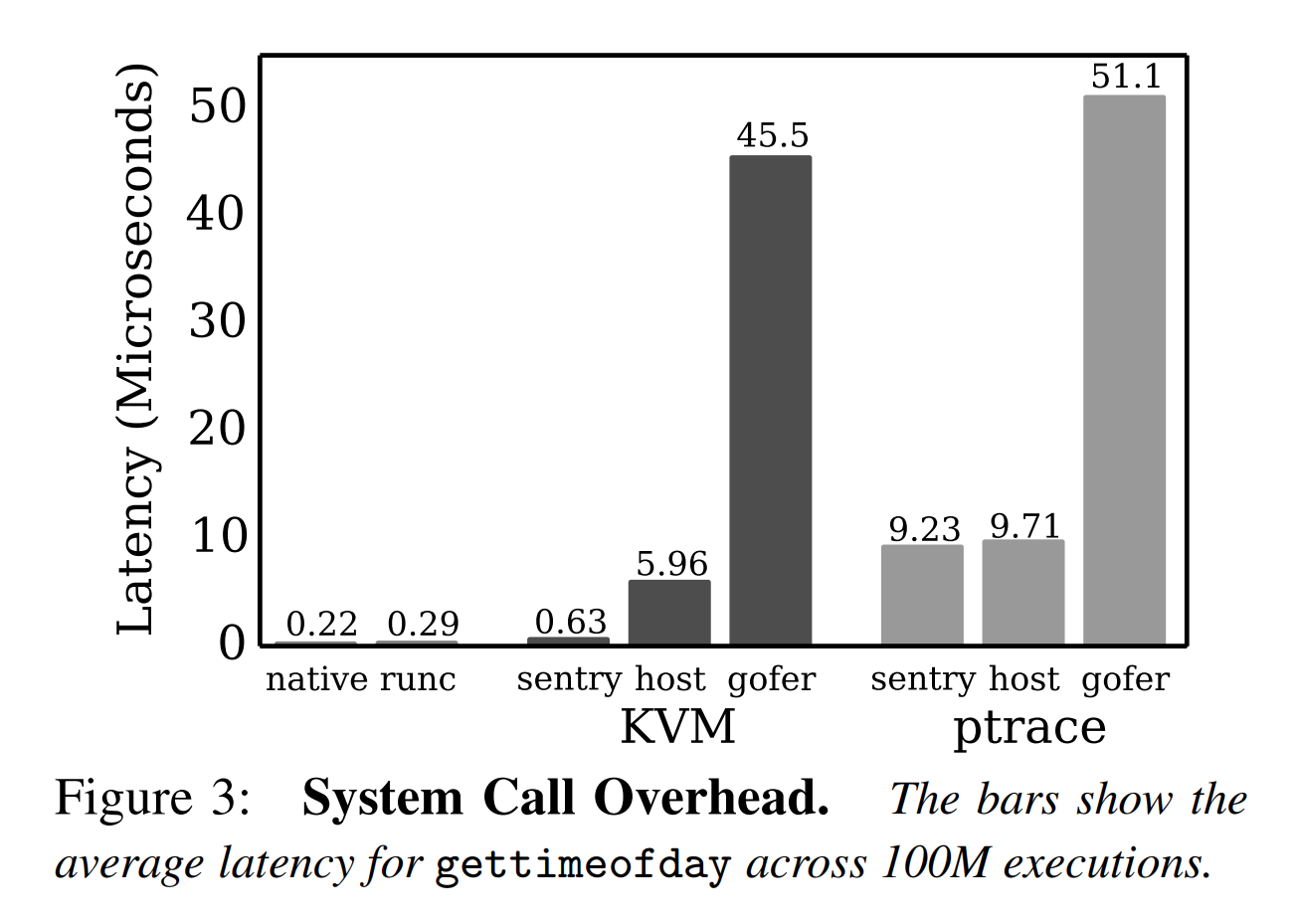

gVisor is arguably more secure than runc, as a compromised Sentry only gives an attacker access to a userspace process severely limited by seccomp filters, whereas a compromised Linux namespace or cgroup may give an attacker access to the host kernel. Unfortunately, our analysis shows that the true costs of effectively containing are high: system calls are 2.2× slower, memory allocations are 2.5× slower, large downloads are 2.8× slower, and file opens are 216× slower. We believe that bringing attention to these performance and scalability issues is the first step to building future container systems that are both fast and secure.

The True Cost of Containing: A gVisor Case Study

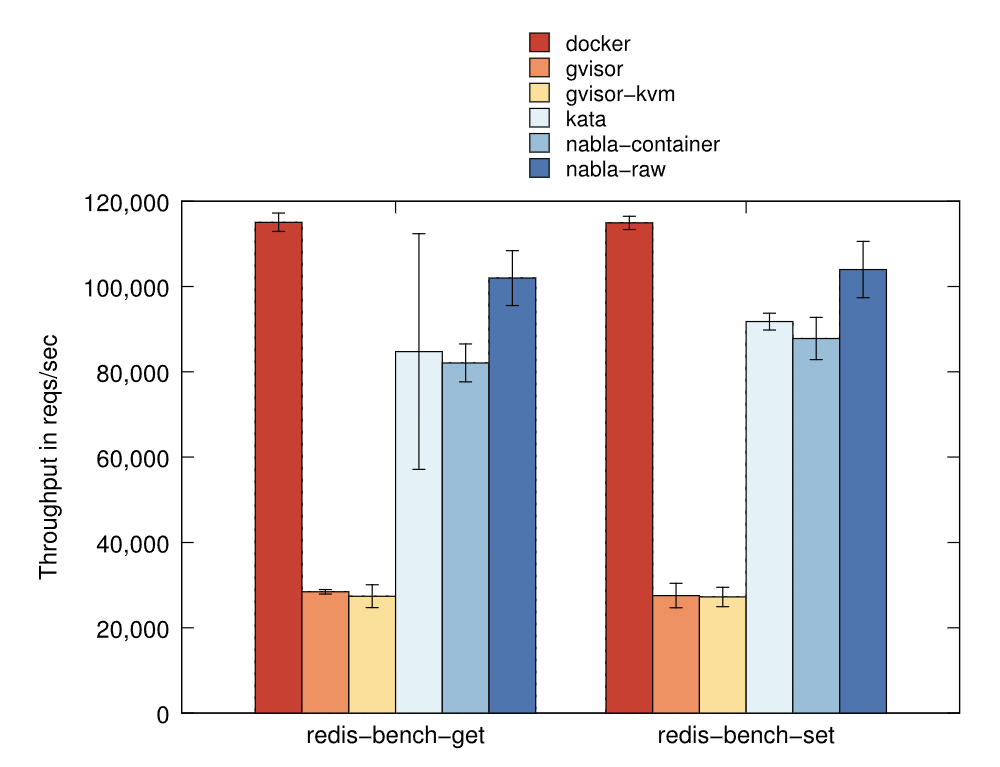

One final note is that, as expected, gVisor sucks because ptrace is a really inefficient way of connecting the syscalls to the sandbox. However, it is more surprising that gVisor-kvm (where the sandbox connects to the system calls of the container using hypercalls instead) is also pretty lacking in performance. I speculate this is likely because hypercalls exact their own penalty and hypervisors usually try to minimise them, which using them to replace system calls really doesn’t do.

Measuring the Horizontal Attack Profile of Nabla Containers

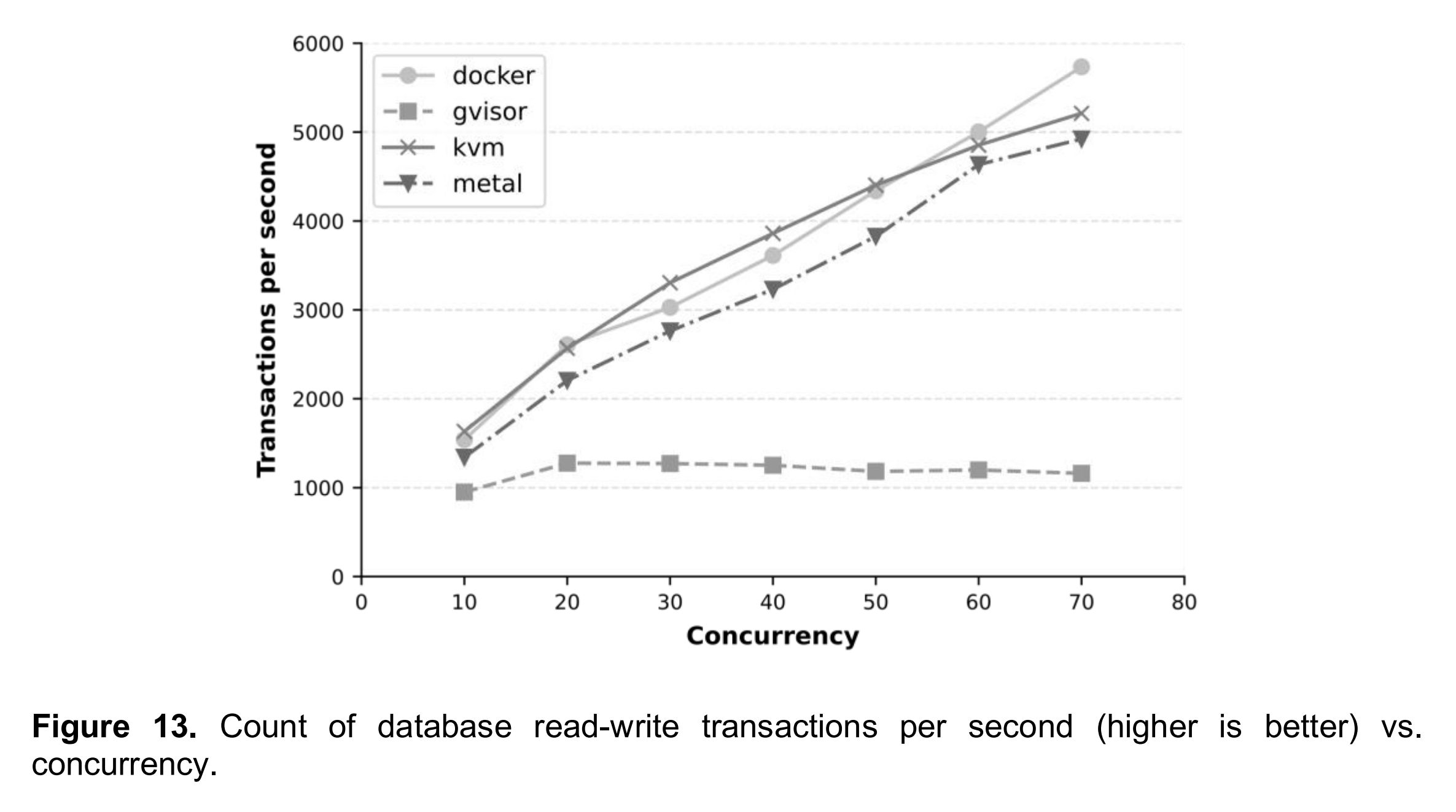

Sandboxed container runtime gVisor provided varying results. It was both capable of launching an instance and performing disk file reading almost as quickly as the default Docker runtime runc, but in many tests it was the worst performer. It was the worst in disk file writing, network bandwidth and CPU events, which means that the overhead of additional security is a concern in terms of performance. Moreover, the fact that gVisor was unable to get any points from the database benchmarking was unexpected result when considering that it received the same total score from low-level benchmarking than Firecracker did. On the other hand, because the database benchmarking was not conducted for Firecracker, it is not possible compare the two in that sense. One reason for the performance issues of gVisor, especially regarding the database benchmarking, might be caused by the default ptrace platform it uses. According to the documentation of gVisor (Google LLC, 2021c), “the ptrace platform has high context switch overhead, so system call-heavy applications may pay a performance penalty”. This can be mitigated by switching to the KVM platform that gVisor also supports, but testing beyond default configurations was out of scope in this research.

The optimal use-cases for gVisor are deployments which do not contain system call-heavy applications and can leverage the OCI implementation of gVisor. Such environments can be, for example, Kubernetes clusters, which are running applications that control business logic without need for heavy I/O operations, such as media streaming or data storing on local file system. Another good use-case for gVisor could be, for example, arbitrary workloads that run untrusted code, such as in continuous integration pipelines. Using the runsc runtime instead of Docker’s default runc allows using the same container orchestration tools with extended security where it is needed. However, gVisor comes with limitations as well. It is not compatible with any arbitrary existing Docker image; for example, in this research, the installation of GitLab Server failed most likely due to failing system calls. In addition to that, similarly as Firecracker, gVisor is lacking support for hardware-accelerated GPUs (gVisor Contributors, 2018).

Evaluating Performance of Serverless Virtualization

Emphasis mine.

These are pretty scathing criticisms. It seems weird to me that DigitalOcean would choose to use a piece of software with “216x slower” filesystem performance when compared to Docker.
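To get a feel for what a slowdown of that size could mean per request, here is a back-of-the-envelope calculation. The inputs (500 file opens per request, 2 µs per open on a plain container) are hypothetical, and the 216× figure comes from one benchmark in the paper quoted above, so it may not carry over directly to a real application.

```python
def open_overhead_ms(files_per_request: int, native_open_us: float, slowdown: float) -> float:
    """Estimate the milliseconds per request spent opening files under a given slowdown."""
    return files_per_request * native_open_us * slowdown / 1000.0


# Hypothetical inputs: 500 file opens per request at 2 µs each on a plain container.
native_ms = open_overhead_ms(500, 2.0, 1.0)
sandboxed_ms = open_overhead_ms(500, 2.0, 216.0)
print(f"native: {native_ms:.0f} ms, sandboxed: {sandboxed_ms:.0f} ms")
```

Under these assumptions, file opens alone go from about 1 ms to about 216 ms per request, which is the difference between unnoticeable and clearly sluggish.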

I can’t really recommend using the DigitalOcean App Platform in its current state due to its poor performance. DigitalOcean markets the platform as a general-purpose platform but from what I’ve read, the gVisor virtualization tool is not well suited for this since it performs so poorly with disk operations which are commonly used in web applications.

I don’t think the performance will improve any time soon unless Google makes major improvements to gVisor or DigitalOcean chooses to use another tool for sandboxing containers.

All in all, I really wanted to like the DigitalOcean App Platform. It has a great user interface. The build and deployment pipeline works really well and has atomic, zero-downtime deployments with automatic rollback. The pricing is fair compared to Heroku. But the performance issues are a dealbreaker for me.

I really do hope the App Platform team will be able to address this issue in the future since I think the platform has a solid base, and with improved performance, it could be a major contender among serverless hosting providers.

Addendum

I realize that many of the problems with gVisor and file system performance are specific to PHP. This is because every PHP request starts with a clean slate. This means the whole application is loaded from the filesystem on every request, which exacerbates the issue with poor filesystem performance. If you are using a language where the whole application is loaded into memory once when it starts, you might run into issues with file I/O less frequently. It’s still important to be aware of these limitations on the App Platform if you plan to use it for your app.
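The difference between the two models can be sketched in a few lines of Python. This is only an analogy: `load_fresh` mimics reading from the filesystem on every request, while `load_cached` mimics a long-running process that reads once and then serves from memory. Both function names are made up for this example.

```python
import functools
from pathlib import Path


def load_fresh(path: str) -> str:
    """Clean-slate style: hit the filesystem on every call."""
    return Path(path).read_text()


@functools.lru_cache(maxsize=None)
def load_cached(path: str) -> str:
    """Long-running-process style: read the file once, then serve it from memory."""
    return Path(path).read_text()
```

With the cached version, slow file I/O is paid once per file for the lifetime of the process instead of once per request. The trade-off is that changes on disk are not picked up until the process restarts.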

If you want to run Docker containers in a serverless environment, I can highly recommend Google Cloud Run. I have migrated my blog from DigitalOcean App Platform to Cloud Run and it runs like a dream compared to the App Platform.

Funnily enough, Google also uses gVisor, at least when running a first-generation Cloud Run container. Despite this, they are somehow able to avoid the same performance issues that plague the DigitalOcean App Platform. I’m not entirely sure how they are able to do it. Maybe it’s because Google’s containers use a RAM disk instead of an SSD?

Google has not specified what technology they use for their second-generation containers, only that they offer “full Linux compatibility rather than the system call emulation provided by gVisor.”